In early mission formulation, we often rely on optimization tools to explore the design space and identify a small set of promising architectures. These are often challenging multi-objective optimization problems with expensive and non-convex objective functions. The idea behind this research project is very simple. Part of the reason why it takes a long time to solve these optimization problems is that the optimization algorithms completely ignore the massive amount of information and knowledge (heuristics, lessons learned, rules of thumb) that we (e.g., systems engineers at NASA) have about designing space missions. For example, I know that, in general, it is probably not a good idea to put several large and high-energy instruments on the same platform. Is there a way to leverage this heuristic? In the past, we have often relied on hard constraints to encode such heuristics. However, hard constraints are not the best representation for all of them, because a hard constraint rules out every design that violates the heuristic, and in some cases it may actually be a good idea to put several high-energy instruments on the same platform.

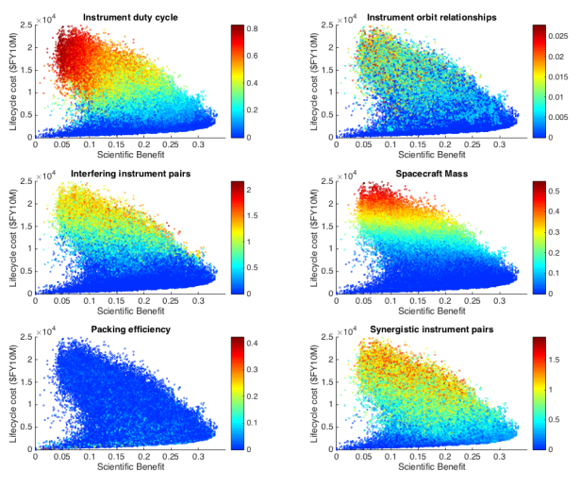

In addition, even perfectly reasonable heuristics that are useful for some problems are not that useful for other problems of the same type. The figure below shows how six reasonable heuristics about designing Earth observing missions have widely different correlations with good designs (i.e., those close to the Pareto frontier).

In this project, we explored several approaches to incorporate heuristics into mission design optimization, using soft constraints and bandit-based methods. Spoiler alert: the winner ended up being operator-based methods. Interested? Read more below.

This project consisted of three phases.

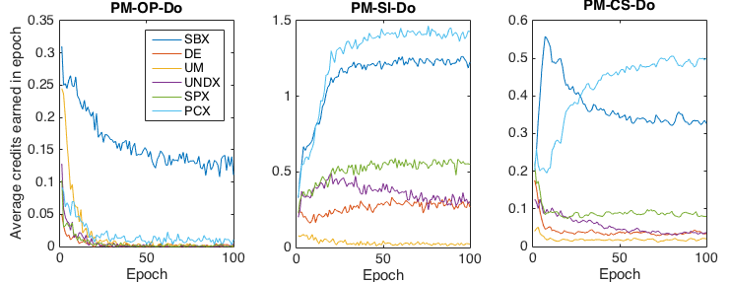

In Phase 1, we explored the theory behind Adaptive Operator Selection (AOS), a simple reinforcement learning approach from multi-armed bandit problems that we used to learn which operators work best for a given problem. We use AOS in the context of evolutionary optimization. Instead of using a single crossover operator and a single mutation operator, in AOS we maintain a pool of operators. We monitor the performance of the operators and assign credit to them when they generate good solutions. We then select operators probabilistically based on the performance they have shown so far. That way we can learn which operators work best for the problem at hand.
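To make the loop above concrete, here is a minimal sketch of AOS using probability matching, one common selection rule. It is an illustration under stated assumptions, not the implementation from our papers: the class `ProbabilityMatchingAOS`, the parameters `p_min` and `alpha`, the two toy operators, and the binary "did the child improve on the parent" credit are all hypothetical choices made for this example, and the toy problem is single-objective bit counting rather than a real multi-objective mission design problem.

```python
import random


class ProbabilityMatchingAOS:
    """Toy adaptive operator selection with probability matching (illustrative only)."""

    def __init__(self, operators, p_min=0.05, alpha=0.3, seed=None):
        self.operators = list(operators)
        self.p_min = p_min    # floor probability so no operator is starved
        self.alpha = alpha    # how quickly old credit is forgotten
        self.quality = [1.0] * len(self.operators)  # start with equal quality
        self.rng = random.Random(seed)

    def probabilities(self):
        """Selection probabilities proportional to quality, with a floor of p_min."""
        k = len(self.operators)
        total = sum(self.quality)
        if total <= 0.0:
            return [1.0 / k] * k
        return [self.p_min + (1.0 - k * self.p_min) * q / total
                for q in self.quality]

    def select(self):
        """Pick an operator index at random according to the current probabilities."""
        indices = range(len(self.operators))
        return self.rng.choices(indices, weights=self.probabilities())[0]

    def reward(self, idx, credit):
        """Blend the new credit into the operator's running quality estimate."""
        self.quality[idx] = (1.0 - self.alpha) * self.quality[idx] + self.alpha * credit


def flip_random_bit(bits, rng):
    """Generic mutation: flip one randomly chosen bit."""
    child = list(bits)
    i = rng.randrange(len(child))
    child[i] = 1 - child[i]
    return child


def set_first_zero(bits, rng):
    """Stand-in for a knowledge-based operator: turn the first 0 into a 1."""
    child = list(bits)
    if 0 in child:
        child[child.index(0)] = 1
    return child


def run_demo(n_bits=30, iterations=200, seed=0):
    """Maximize the number of ones while learning which operator helps more."""
    rng = random.Random(seed)
    aos = ProbabilityMatchingAOS([flip_random_bit, set_first_zero], seed=seed)
    parent = [0] * n_bits
    for _ in range(iterations):
        idx = aos.select()
        child = aos.operators[idx](parent, rng)
        improved = sum(child) > sum(parent)
        aos.reward(idx, 1.0 if improved else 0.0)  # binary credit (assumption)
        if improved:
            parent = child
    return aos.probabilities(), parent


if __name__ == "__main__":
    probs, best = run_demo()
    print("selection probabilities:", probs)
    print("ones in best design:", sum(best))
```

The floor `p_min` keeps every operator in play, so an operator that only becomes useful late in the search can still be rediscovered. How credit is defined matters a lot in the multi-objective case; the binary credit used here is only a placeholder.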

We conducted a large computational experiment to show that AOS methods generally outperform state-of-the-art evolutionary optimization algorithms when evaluated on a wide range of benchmark problems. You can check out the paper here:

Hitomi, N., & Selva, D. (2017). A Classification and Comparison of Credit Assignment Strategies in Multiobjective Adaptive Operator Selection. IEEE Transactions on Evolutionary Computation, 21(2), 294–314. https://doi.org/10.1109/TEVC.2016.2602348

In Phase 2, we encoded heuristics in the form of operators and used AOS to leverage them. We also compared the efficiency of the AOS technique against other methods based on soft constraints. Find out some more information here or in the paper below:

Hitomi, N., & Selva, D. (2018). Incorporating expert knowledge into evolutionary algorithms with operators and constraints to design satellite systems. Applied Soft Computing Journal, 66, 330–345. https://doi.org/10.1016/j.asoc.2018.02.017
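As a rough illustration of the two encodings compared in Phase 2, the sketch below takes the heuristic "avoid putting several high-energy instruments on the same platform" and expresses it once as a soft constraint (a penalty added to an objective) and once as an operator (a move that nudges a design toward satisfying the heuristic). Everything here is hypothetical and chosen only for the example: the instrument names in `HIGH_POWER`, the list-of-lists architecture encoding, the penalty `weight`, and the function names. It is not the formulation used in the paper.

```python
import random

# Hypothetical set of high-energy instruments (names are made up for this sketch).
HIGH_POWER = {"SAR", "LIDAR", "RADAR_ALT"}


def violations(arch):
    """Count platforms that carry more than one high-power instrument.

    `arch` is a list of platforms; each platform is a list of instrument names.
    """
    return sum(1 for platform in arch if len(HIGH_POWER & set(platform)) > 1)


def soft_constraint_objective(cost, arch, weight=10.0):
    """Soft-constraint encoding: penalize, but do not forbid, violating designs."""
    return cost + weight * violations(arch)


def split_high_power_operator(arch, rng):
    """Operator encoding: move one high-power instrument off a crowded platform.

    Returns a new architecture and leaves the input untouched. If no platform
    is crowded (or there is only one platform), the copy is returned unchanged.
    """
    child = [list(platform) for platform in arch]
    crowded = [i for i, p in enumerate(child) if len(HIGH_POWER & set(p)) > 1]
    if not crowded or len(child) < 2:
        return child
    src = rng.choice(crowded)
    instrument = rng.choice(sorted(HIGH_POWER & set(child[src])))
    dst = rng.choice([i for i in range(len(child)) if i != src])
    child[src].remove(instrument)
    child[dst].append(instrument)
    return child


if __name__ == "__main__":
    design = [["SAR", "LIDAR", "IMAGER"], ["RADIOMETER"]]
    print("violations before:", violations(design))
    repaired = split_high_power_operator(design, random.Random(0))
    print("violations after: ", violations(repaired))
```

The difference in behavior is the point: the penalty changes how every design is ranked, whereas the operator only changes how new designs are generated, so AOS can simply stop selecting it if the heuristic turns out not to help on a given problem.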

Finally, in Phase 3, we incorporated a data mining layer to learn new operators online. We used association rule mining and mRMR to extract new heuristics and the AOS technique to learn which ones are most useful. Find out more information here and check out the paper below:

Hitomi, N., Bang, H., & Selva, D. (2018). Adaptive Knowledge-Driven Optimization for Architecting a Distributed Satellite System. Journal of Aerospace Information Systems, 15(8), 485–500. https://doi.org/10.2514/1.I010595
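For readers unfamiliar with association rule mining, the sketch below shows the standard support, confidence, and lift measures for a single candidate rule of the form "design has feature X, therefore design is good". It is only meant to convey the kind of quantity the data mining layer works with: the function name `rule_metrics`, the boolean-list inputs, and the example data are assumptions for this illustration, and the sketch covers neither the mRMR step nor the way rules are turned into operators.

```python
def rule_metrics(has_feature, is_good):
    """Support, confidence, and lift of the rule 'feature -> good design'.

    `has_feature[i]` says whether design i exhibits the candidate feature;
    `is_good[i]` says whether design i counts as good (e.g., close to the
    Pareto frontier, under whatever threshold the analyst picks).
    """
    if len(has_feature) != len(is_good) or not has_feature:
        raise ValueError("inputs must be non-empty and of equal length")
    n = len(has_feature)
    n_feature = sum(1 for f in has_feature if f)
    n_good = sum(1 for g in is_good if g)
    n_both = sum(1 for f, g in zip(has_feature, is_good) if f and g)

    support = n_both / n                                 # P(feature and good)
    confidence = n_both / n_feature if n_feature else 0.0  # P(good | feature)
    lift = confidence / (n_good / n) if n_good else 0.0    # P(good | feature) / P(good)
    return support, confidence, lift


if __name__ == "__main__":
    # Eight made-up designs: three have the feature, three are good, two are both.
    feature = [True, True, True, False, False, False, False, False]
    good = [True, True, False, False, False, False, False, True]
    print(rule_metrics(feature, good))
```

A lift above 1 means designs with the feature are good more often than designs in general, which makes the feature a candidate for a new operator; whether that operator actually helps is then left to AOS to find out.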

This project was funded by the NASA NSTRF fellowship program.