Anyone who has used a design optimization or design space exploration tool knows that the value of these tools lies not only in the answer they give (i.e., the “preferred” design or designs) but also in the information gained about the design problem while interacting with them. Indeed, we are all familiar with how good optimization algorithms are at finding what is wrong with our models. So we use such tools within an interactive, iterative process of model refinement, search, and data analysis.

In the data analysis step, the user explores the datasets output by the search algorithm and tries to answer questions such as “what, if anything, do good designs have in common?” For example, in a problem about designing constellations of Earth-observing satellites, it may be that good constellations all carry two synergistic instruments together, or avoid certain problematic instrument-orbit pairings.

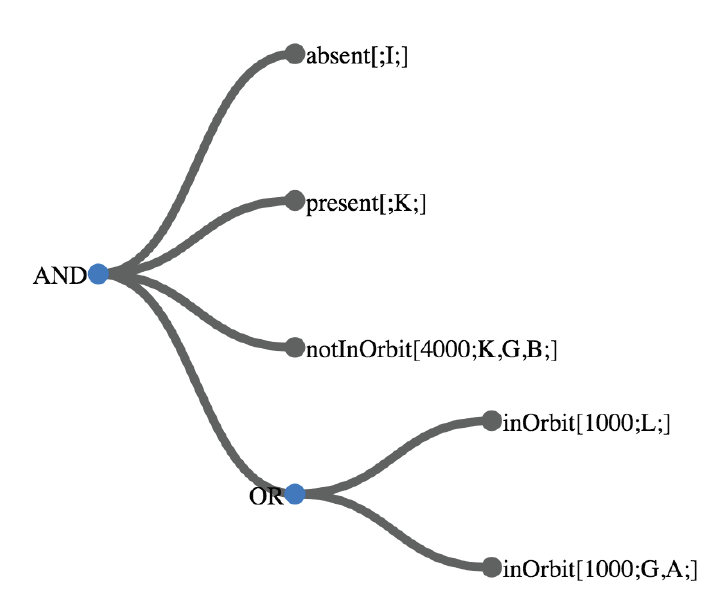

Slightly more formally, a feature is a design attribute shared by a subset of designs in the design space. It is a (binary) predicate function that can be defined as a logical expression over variables representing design decisions and other features. The figure below shows an example of a feature.
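To make the definition concrete, here is a minimal sketch of features as composable predicates. It assumes a hypothetical encoding of a constellation design as a mapping from orbit name to the set of instruments flown in that orbit; the instrument and orbit names, and the helpers `together` and `in_orbit`, are made up for illustration and are not part of iFEED.

```python
from typing import Callable, Dict, FrozenSet

# Hypothetical encoding: each orbit name maps to the set of instruments
# carried by the satellite in that orbit.
Design = Dict[str, FrozenSet[str]]
Feature = Callable[[Design], bool]  # a binary predicate over designs


def together(a: str, b: str) -> Feature:
    """Base feature: instruments a and b fly on the same satellite somewhere."""
    return lambda d: any(a in insts and b in insts for insts in d.values())


def in_orbit(instrument: str, orbit: str) -> Feature:
    """Base feature: the instrument is assigned to the given orbit."""
    return lambda d: instrument in d.get(orbit, frozenset())


# Logical connectives build compound features out of other features.
def AND(*fs: Feature) -> Feature:
    return lambda d: all(f(d) for f in fs)


def OR(*fs: Feature) -> Feature:
    return lambda d: any(f(d) for f in fs)


def NOT(f: Feature) -> Feature:
    return lambda d: not f(d)


# "Radar and radiometer share a satellite, and the lidar is not in the SSO-AM orbit."
feature = AND(together("radar", "radiometer"), NOT(in_orbit("lidar", "SSO-AM")))

design = {"SSO-AM": frozenset({"radar", "radiometer"}), "polar": frozenset({"lidar"})}
print(feature(design))  # True
```

Because compound features are themselves predicates, they can be nested inside further `AND`/`OR`/`NOT` expressions, matching the idea that features may be defined in terms of other features.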

The question of how to find interesting features depends on how we define “interesting”. Ideally, an interesting feature would be easy for humans to understand and would have very high predictive power, although these two attributes are often at odds. Furthermore, predictive power is itself multidimensional: a feature may be very general (meaning that most designs in the target region have that feature) or very specific (meaning that all designs that have the feature are in the target region), but it is hard or impossible to find an ideal feature that is both general and specific.
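The two notions of predictive power correspond to standard rule-mining measures: specificity in this sense is precision (the confidence of the rule feature → target), and generality is recall (the confidence of target → feature). A minimal sketch that scores one feature against a target region, assuming the designs are given as a sequence and the feature and target region as plain predicates; the toy design space of integers is purely illustrative.

```python
from typing import Callable, Dict, Sequence, TypeVar

T = TypeVar("T")


def feature_metrics(
    designs: Sequence[T],
    in_target: Callable[[T], bool],
    feature: Callable[[T], bool],
) -> Dict[str, float]:
    """Score a feature against a target region of the design space."""
    has_f = [feature(d) for d in designs]
    is_t = [in_target(d) for d in designs]
    n_f = sum(has_f)  # designs that have the feature
    n_t = sum(is_t)   # designs in the target region
    n_both = sum(f and t for f, t in zip(has_f, is_t))
    return {
        # fraction of all designs that have the feature AND are in the target
        "support": n_both / len(designs),
        # "specific": share of feature holders that are in the target region
        "precision": n_both / n_f if n_f else 0.0,
        # "general": share of target designs that have the feature
        "recall": n_both / n_t if n_t else 0.0,
    }


# Toy design space: designs 0..9, target region = designs 0..3.
def in_target_region(d: int) -> bool:
    return d < 4


designs = range(10)
print(feature_metrics(designs, in_target_region, lambda d: d < 2))
# {'support': 0.2, 'precision': 1.0, 'recall': 0.5}
print(feature_metrics(designs, in_target_region, lambda d: d < 8))
# {'support': 0.4, 'precision': 0.5, 'recall': 1.0}
```

The two toy features show the tension described above: the first is perfectly specific but covers only half of the target region, while the second covers all of it at the cost of admitting as many non-target designs as target ones.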

In general, this problem can be formulated as a feature extraction problem. There are many feature extraction methods, ranging from fully manual methods such as simple visualization and trial-and-error (e.g., color-coding designs according to a candidate feature) to fully automated methods such as association rule mining. However, both extremes scale poorly, for different reasons: inherent human limitations in the first case, and the curse of dimensionality in the second.
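As an illustration of the automated end of this spectrum, association rule mining can be sketched as an Apriori-style level-wise search for conjunctions of base features that imply membership in the target region. This is a minimal sketch under stated assumptions, not iFEED's implementation: each design is assumed to be pre-encoded as the set of hypothetical binary base features it satisfies (labelled A, B, C here), and the rule consequent is always "in the target region". The pruning step relies on support being anti-monotone: a conjunction can never hold for more target designs than any of its sub-conjunctions. Pruning helps, but with n binary design decisions there are already 3^n - 1 nonempty conjunctions of literals (each decision is absent, asserted, or negated), which is where the curse of dimensionality bites.

```python
from itertools import combinations
from typing import FrozenSet, List, Sequence, Tuple

Itemset = FrozenSet[str]


def mine_features(
    designs: Sequence[Itemset],
    in_target: Sequence[bool],
    min_support: float,
    max_len: int = 3,
) -> List[Tuple[Itemset, float, float]]:
    """Apriori-style search for conjunctive features that predict the target.

    Each design is given as the set of (hypothetical) base features it satisfies.
    Returns (conjunction, support, confidence) triples, best confidence first.
    """
    n = len(designs)
    rows = list(zip(designs, in_target))

    def support(items: Itemset) -> float:
        # Support of the rule "items -> target": share of designs with both.
        return sum(1 for d, t in rows if t and items <= d) / n

    singles = sorted({i for d in designs for i in d})
    level = [frozenset([i]) for i in singles if support(frozenset([i])) >= min_support]
    frequent = list(level)
    k = 1
    while level and k < max_len:
        k += 1
        prev = set(level)
        # Join step: union pairs of frequent (k-1)-conjunctions into k-conjunctions.
        candidates = {a | b for a in level for b in level if len(a | b) == k}
        # Prune step: every (k-1)-subset must itself be frequent (anti-monotonicity).
        candidates = {
            c for c in candidates
            if all(frozenset(s) in prev for s in combinations(c, k - 1))
        }
        level = [c for c in candidates if support(c) >= min_support]
        frequent.extend(level)

    results = []
    for items in frequent:
        n_f = sum(1 for d in designs if items <= d)
        n_both = sum(1 for d, t in rows if t and items <= d)
        results.append((items, n_both / n, n_both / n_f if n_f else 0.0))
    # Highest confidence first; ties broken by support, then alphabetically.
    return sorted(results, key=lambda r: (-r[2], -r[1], sorted(r[0])))


example = [frozenset(s) for s in ({"A", "B"}, {"A", "B", "C"}, {"A", "C"}, {"B"}, {"A", "B"}, {"C"})]
labels = [True, True, False, False, True, False]
for items, supp, conf in mine_features(example, labels, min_support=0.3):
    print(sorted(items), round(supp, 2), round(conf, 2))
# ['A', 'B'] 0.5 1.0
# ['A'] 0.5 0.75
# ['B'] 0.5 0.75
```

Here the conjunction of A and B is the only feature with confidence 1.0; A and B alone reach the same target designs but also admit a non-target one.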

Our lab is exploring collaborative approaches to feature extraction that make the most of the respective advantages of humans and machines. iFEED (interactive feature extraction for engineering design) is one such method, in which a human interacts with an agent running an automated feature extraction algorithm (e.g., the Apriori algorithm, or maximum relevance minimum redundancy). The human plays various roles at different levels of abstraction, ranging from proposing “classes” of features to explore (predicate functions that take arguments) to actively participating in the search by trying out specific new features. Below is a video that shows an example interaction with a basic iFEED agent and tool.

One of the contributions of this work was a new visualization that we call the feature space chart. A feature space chart shows design features in a 2D space of importance measures (typically precision and recall, or support and confidence; the measures can be selected by the user). A study with human subjects showed that users with prior exposure to design space exploration learned more about the design problem when interacting with the feature space chart than when interacting with a design space chart.
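In a feature space chart each feature becomes a point whose coordinates are two importance measures. The sketch below assumes precision and recall as the axes and picks out the features that no other feature beats on both measures, i.e. the tradeoff front between specificity and generality. Whether the iFEED chart highlights such a front is an assumption made here for illustration; the feature names and coordinates are hypothetical.

```python
from typing import Dict, List, Tuple


def nondominated(points: Dict[str, Tuple[float, float]]) -> List[str]:
    """Names of features that no other feature beats on both axes.

    points maps a feature name to its (precision, recall); both are maximized.
    """
    front = []
    for name, (p, r) in points.items():
        dominated = any(
            p2 >= p and r2 >= r and (p2 > p or r2 > r)
            for other, (p2, r2) in points.items()
            if other != name
        )
        if not dominated:
            front.append(name)
    return sorted(front)


# Hypothetical features placed in the feature space chart as (precision, recall).
chart = {"f1": (0.4, 0.5), "f2": (1.0, 0.5), "f3": (0.5, 1.0), "f4": (0.7, 0.7)}
print(nondominated(chart))  # ['f2', 'f3', 'f4']
```

Feature f1 is dominated by f2 (at least as good on both axes, strictly better on precision), so it drops out; the remaining three each trade precision for recall differently.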

Currently, we are exploring various other aspects of feature space exploration, including:

- The development of new automated feature extraction algorithms based on genetic programming, generalization operators, and adaptive operator selection.

- The use of cognitive assistance for feature space exploration.
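Adaptive operator selection is named above without further detail. One well-known scheme from the evolutionary computation literature is probability matching, sketched below as a generic example rather than the specific scheme used in this work. The operator names are hypothetical, and the reward (for instance, the improvement in a feature's precision or recall after applying an operator) is an assumption; rewards are assumed to be non-negative.

```python
import random
from typing import Dict, List


class ProbabilityMatching:
    """Adaptive operator selection by probability matching.

    Each operator keeps a running quality estimate; selection probabilities are
    proportional to quality, with a floor p_min so no operator is starved.
    """

    def __init__(self, operators: List[str], p_min: float = 0.1,
                 alpha: float = 0.3, seed: int = 0) -> None:
        if p_min * len(operators) >= 1:
            raise ValueError("p_min * number of operators must be < 1")
        self.ops = list(operators)
        self.p_min = p_min
        self.alpha = alpha  # adaptation rate of the quality estimates
        self.quality = {op: 1.0 for op in self.ops}
        self.rng = random.Random(seed)

    def probabilities(self) -> Dict[str, float]:
        total = sum(self.quality.values())
        k = len(self.ops)
        if total <= 0:  # no operator has earned credit: fall back to uniform
            return {op: 1.0 / k for op in self.ops}
        return {op: self.p_min + (1 - k * self.p_min) * q / total
                for op, q in self.quality.items()}

    def select(self) -> str:
        probs = self.probabilities()
        return self.rng.choices(self.ops, weights=[probs[op] for op in self.ops])[0]

    def update(self, op: str, reward: float) -> None:
        # Exponential recency-weighted average of the rewards this operator earned.
        self.quality[op] += self.alpha * (reward - self.quality[op])


# Hypothetical operators; the reward here is a stand-in for real feature improvement.
aos = ProbabilityMatching(["generalize", "specialize", "mutate"])
for _ in range(20):
    op = aos.select()
    aos.update(op, 1.0 if op == "generalize" else 0.0)
print(aos.probabilities())
```

The floor `p_min` keeps every operator in play, so an operator that was unhelpful early in the search can still be rediscovered if it becomes useful later.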

Publications:

[1] H. Bang, Y. L. Z. Shi, S.-Y. Yoon, G. Hoffman, and D. Selva, “Exploring the Feature Space to Aid Learning in Design Space Exploration,” in Design Computing and Cognition ’18, 2018.

[2] M. Law, N. Dhawan, H. Bang, S.-Y. Yoon, D. Selva, and G. Hoffman, “Side-by-side Human-Computer Design using a Tangible User Interface,” in Design Computing and Cognition ’18, 2018.

[3] L. Shi, H. Bang, G. Hoffman, D. Selva, and S.-Y. Yoon, “Cognitive style and field knowledge in complex design problem solving: A comparative case study of decision support systems,” in Design Computing and Cognition ’18, 2018.

[4] H. Bang and D. Selva, “Leveraging Logged Intermediate Design Attributes For Improved Knowledge Discovery In Engineering Design,” in Proceedings of the ASME 2017 International Design Engineering Technical Conferences & Computers and Information in Engineering Conference IDETC/CIE 2017, 2017, vol. 2A–2017.

[5] H. Bang and D. Selva, “iFEED: Interactive feature extraction for engineering design,” in Proceedings of the ASME Design Engineering Technical Conference, 2016, vol. 7.